What this site is and how it came to be

I’ve wanted to start a blog for a good while. My main goal is to solidify what I learn about different topics, whether that’s technology or Zen Buddhism, although it’ll probably be mostly about technology. Teaching is often the best way to learn, so ultimately the goal is to learn more, and faster.

Those are the “business goals”, so let’s talk about the technical ones. Mainly, I don’t want the site to cost much (I’m okay with $1-$10/month). I’d like to use AWS, as it’s the cloud provider I’m most familiar with and I want to get started quickly. I want the architecture to be simple and performant. Security is also important, as I don’t want to become the target of a denial-of-wallet (DoW) attack. So, the main goals are:

- Security

- Performance

- Low cost

- Simplicity

Secure static site from AWS

Googling those keywords quickly turns up this solution from AWS: Use an Amazon CloudFront distribution to serve a static website. It hits pretty much all of the main points. I describe the changes I made to its template in the AWS section below.

To build the static site, I decided to use Hugo. There’s no real reason for this choice; I’m sure many other options would have suited my criteria. Mainly, I wanted a framework that supports Markdown content, builds into static files, and has some templating support (in Hugo, that means shortcodes, partials and templates).

Architecture diagram

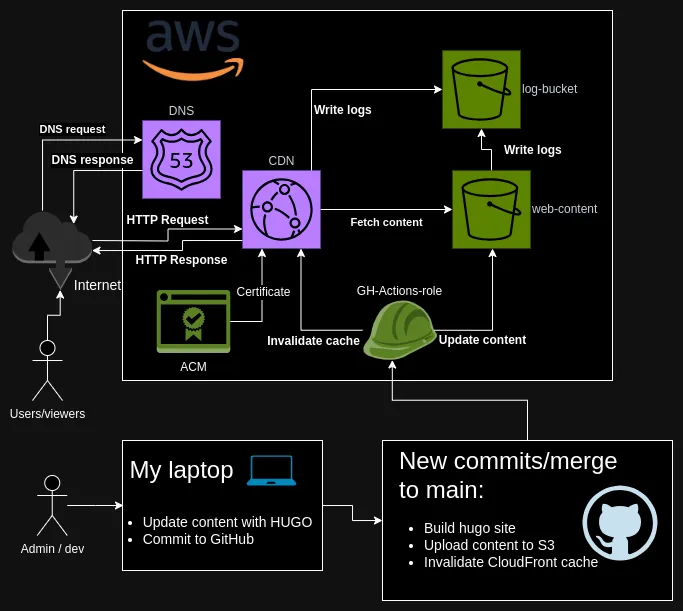

The architecture diagram

Here is the entire architecture. Each piece has more detail, which I’ll do my best to explain below. The main flows and areas are:

- The development workflow is really just creating new Markdown files and pushing a commit to GitHub (my laptop)

- GitHub Actions automatically updates the content in S3 and invalidates the CloudFront cache, so that new content is immediately visible (GitHub)

- Users access the site through CloudFront; content is stored in S3, the SSL/TLS certificate comes from ACM, and DNS is managed in Route 53 (AWS)

I decided to use only the North American and European cache locations, since I’m not expecting much traffic from elsewhere. DNS records are stored in AWS Route 53, and an automatically renewing SSL/TLS certificate from AWS Certificate Manager is used. Direct access to S3 is denied, although that isn’t strictly necessary. I know there is at least this one, but it doesn’t really apply in this case, as there shouldn’t ever be any large files hosted in S3.
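In CloudFront, limiting the distribution to North American and European edge locations corresponds to the PriceClass_100 setting. The sketch below shows roughly how the relevant part of a distribution config could look. It assumes the CloudFormation property names of AWS::CloudFront::Distribution. The helper function, domain and certificate ARN are hypothetical placeholders, not values taken from the actual template:

```python
import json


def distribution_settings(domain: str, certificate_arn: str) -> dict:
    """Hypothetical helper: a subset of a CloudFront DistributionConfig.

    Property names follow AWS::CloudFront::Distribution in CloudFormation;
    the values are illustrative, not copied from the template used here.
    """
    return {
        "Aliases": [domain],
        # PriceClass_100 restricts the distribution to the least expensive
        # edge regions, which include North America and Europe.
        "PriceClass": "PriceClass_100",
        # Object returned when a viewer requests the bare domain ("/").
        "DefaultRootObject": "index.html",
        "ViewerCertificate": {
            "AcmCertificateArn": certificate_arn,
            "SslSupportMethod": "sni-only",
            "MinimumProtocolVersion": "TLSv1.2_2021",
        },
    }


if __name__ == "__main__":
    settings = distribution_settings(
        "thezencode.com",
        "arn:aws:acm:us-east-1:123456789012:certificate/example",  # placeholder ARN
    )
    print(json.dumps(settings, indent=2))
```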

AWS

The AWS side of things is pretty simple. There is an S3 bucket where the content is hosted. The bucket isn’t publicly accessible; only the CloudFront distribution can access it. This adds some security, as no one can bypass the CloudFront cache to generate extra network traffic out of the S3 bucket. S3 and CloudFront also log to a separate S3 bucket, in case any issues need further investigation.
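One common way to make a bucket readable only through CloudFront is Origin Access Control (OAC). With OAC, the bucket policy trusts the CloudFront service principal for one specific distribution. The template used here may implement this differently; older templates of this kind used an Origin Access Identity. Treat the following as a sketch of the policy shape only. The helper name, bucket name, account ID and distribution ID are all hypothetical:

```python
import json


def cloudfront_only_bucket_policy(bucket: str, account_id: str, distribution_id: str) -> dict:
    """Hypothetical helper: bucket policy allowing reads only via one CloudFront distribution (OAC)."""
    distribution_arn = f"arn:aws:cloudfront::{account_id}:distribution/{distribution_id}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "AllowCloudFrontServicePrincipalReadOnly",
                "Effect": "Allow",
                "Principal": {"Service": "cloudfront.amazonaws.com"},
                "Action": "s3:GetObject",
                "Resource": f"arn:aws:s3:::{bucket}/*",
                # Only requests made on behalf of this distribution match.
                "Condition": {"StringEquals": {"AWS:SourceArn": distribution_arn}},
            }
        ],
    }


if __name__ == "__main__":
    policy = cloudfront_only_bucket_policy("example-content-bucket", "123456789012", "E2EXAMPLE")
    print(json.dumps(policy, indent=2))
```

The policy has no other Allow statements. Assuming S3 Block Public Access is also enabled, requests that go straight to the bucket are denied, so all traffic has to go through the CloudFront cache.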

Everything is deployed using a CloudFormation template. I used the template from here, with only some minor changes:

- I changed it to take in a certificate ARN instead of creating a certificate. I have one certificate for thezencode.com and *.thezencode.com, and I’ll reuse it in case I host other projects on AWS later.

- I changed it so that the stack can be deployed in other regions. I like to keep all my resources in eu-west-1, so I wanted my S3 bucket there as well.

- I also created an IAM role that GitHub Actions can assume.
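Deploying the stack outside us-east-1 comes with one constraint: CloudFront only accepts ACM certificates issued in us-east-1. The certificate ARN passed to the template therefore has to point at us-east-1, even though the bucket lives in eu-west-1. A minimal pre-deploy sanity check could look like the sketch below. The function names and the ARNs are hypothetical placeholders:

```python
def parse_arn(arn: str) -> dict:
    """Split an ARN of the form arn:partition:service:region:account-id:resource."""
    parts = arn.split(":", 5)  # the resource part may itself contain ':'
    if len(parts) != 6 or parts[0] != "arn":
        raise ValueError(f"not an ARN: {arn!r}")
    _, partition, service, region, account_id, resource = parts
    return {
        "partition": partition,
        "service": service,
        "region": region,
        "account_id": account_id,
        "resource": resource,
    }


def usable_by_cloudfront(certificate_arn: str) -> bool:
    """CloudFront only accepts ACM certificates issued in us-east-1."""
    arn = parse_arn(certificate_arn)
    return arn["service"] == "acm" and arn["region"] == "us-east-1"


if __name__ == "__main__":
    for candidate in (
        "arn:aws:acm:us-east-1:123456789012:certificate/example",
        "arn:aws:acm:eu-west-1:123456789012:certificate/example",
    ):
        print(candidate, usable_by_cloudfront(candidate))
```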

The IAM role uses the OIDC integration between GitHub and AWS. This was a really simple and secure way to give GitHub Actions access to AWS. The credentials are short-lived, and the role has only the minimum permissions GitHub needs: access to the S3 content root bucket and the ability to invalidate the CloudFront cache whenever a new version of the site is deployed.
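For reference, here is roughly what the two policies behind such a role could look like. The sketch assumes the standard GitHub OIDC provider (token.actions.githubusercontent.com) is registered in the AWS account. The helper names, account ID, repository name and resource IDs are hypothetical placeholders. The permission list is an assumed minimum for aws s3 sync --delete plus one invalidation, not a copy of the actual role:

```python
import json

PROVIDER = "token.actions.githubusercontent.com"


def github_oidc_trust_policy(account_id: str, repo: str, branch: str = "main") -> dict:
    """Trust policy letting workflows from one repo/branch assume the role via OIDC."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {"Federated": f"arn:aws:iam::{account_id}:oidc-provider/{PROVIDER}"},
                "Action": "sts:AssumeRoleWithWebIdentity",
                "Condition": {
                    # The token must be issued for AWS STS...
                    "StringEquals": {f"{PROVIDER}:aud": "sts.amazonaws.com"},
                    # ...and come from a workflow run on this repository and branch.
                    "StringLike": {f"{PROVIDER}:sub": f"repo:{repo}:ref:refs/heads/{branch}"},
                },
            }
        ],
    }


def publish_permissions(bucket: str, account_id: str, distribution_id: str) -> dict:
    """Assumed minimum permissions for 'aws s3 sync --delete' plus a cache invalidation."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            # sync lists the bucket to compare remote objects with local files.
            {"Effect": "Allow", "Action": "s3:ListBucket",
             "Resource": f"arn:aws:s3:::{bucket}"},
            # Upload changed files and delete removed ones (--delete).
            {"Effect": "Allow", "Action": ["s3:PutObject", "s3:DeleteObject"],
             "Resource": f"arn:aws:s3:::{bucket}/*"},
            {"Effect": "Allow", "Action": "cloudfront:CreateInvalidation",
             "Resource": f"arn:aws:cloudfront::{account_id}:distribution/{distribution_id}"},
        ],
    }


if __name__ == "__main__":
    print(json.dumps(github_oidc_trust_policy("123456789012", "example-user/example-blog"), indent=2))
    print(json.dumps(publish_permissions("example-content-bucket", "123456789012", "E2EXAMPLE"), indent=2))
```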

CI/CD

I wanted to have an easy process for updating the content on the site. Currently the process is:

- Run hugo new content to create a new .md file (post)

- Write the content

- Commit and push to GitHub

From there, a GitHub Actions workflow is triggered that deploys the site and invalidates the CloudFront cache. The steps in the workflow are:

- Check out the code

- Set up Hugo

- Use Hugo to build the site into the /public directory

- Set up AWS credentials

- Upload the content to S3 (deleting files that aren’t in the local directory)

- Invalidate the CloudFront cache

And here is the entire .github/workflows/actions.yaml file:

```yaml
name: Publish new version
run-name: TheZenCode Blog publish pipeline

on:
  push:
    branches:
      - main

permissions:
  id-token: write   # lets the job request an OIDC token to assume the AWS role
  contents: read    # enough to check out the repository

jobs:
  Publish-TheZenCode-Blog:
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v4
        with:
          submodules: true
          fetch-depth: 0

      - name: Setup Hugo
        uses: peaceiris/actions-hugo@v2
        with:
          hugo-version: '0.124.1'
          extended: true

      - name: Build
        run: hugo --minify

      - name: Configure AWS Credentials
        uses: aws-actions/configure-aws-credentials@v4
        with:
          aws-region: eu-west-1
          role-to-assume: arn:aws:iam::<ACCOUNT_ID>:role/blog-publish-github-action
          role-session-name: GithubActionDeployBlog

      # --delete removes objects from the bucket that no longer exist in public/
      - name: Upload to S3
        run: aws s3 sync ${{ github.workspace }}/public s3://<S3_BUCKET_NAME>/ --delete

      # "/*" invalidates every cached path in the distribution
      - name: Invalidate CloudFront cache
        run: aws cloudfront create-invalidation --distribution-id <CLOUDFRONT_DISTRIBUTION_ID> --paths "/*"
```
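To make the sync step less of a black box, here is a simplified model of how aws s3 sync decides what to do, following the rules in the AWS CLI documentation. A local file is uploaded if it is missing in the bucket, its size differs, or it is newer than the S3 object. With --delete, objects that no longer exist locally are removed. This is a sketch that ignores prefixes, metadata and other flags; the class and function names are hypothetical:

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FileInfo:
    size: int      # bytes
    mtime: float   # last-modified time, seconds since the epoch


def plan_sync(local: dict, remote: dict, delete: bool = True):
    """Return (uploads, deletions) for a local -> S3 sync (simplified model).

    A local file is uploaded when it is missing remotely, its size differs,
    or it is newer than the remote object. With delete=True (like --delete),
    remote keys that have no local counterpart are removed.
    """
    uploads = sorted(
        key
        for key, info in local.items()
        if key not in remote
        or info.size != remote[key].size
        or info.mtime > remote[key].mtime
    )
    deletions = sorted(set(remote) - set(local)) if delete else []
    return uploads, deletions


if __name__ == "__main__":
    local = {
        "index.html": FileInfo(1200, 200.0),      # size changed
        "posts/new.html": FileInfo(800, 200.0),   # new post
        "css/site.css": FileInfo(500, 50.0),      # same size, older than remote
    }
    remote = {
        "index.html": FileInfo(1100, 100.0),
        "css/site.css": FileInfo(500, 100.0),
        "posts/old.html": FileInfo(700, 100.0),   # removed locally
    }
    print(plan_sync(local, remote))
    # (['index.html', 'posts/new.html'], ['posts/old.html'])
```

In a fresh CI checkout, Hugo writes every file anew. All local modification times are then newer than the objects in S3, so by this rule the whole public directory is most likely re-uploaded on every run. For a small blog that is harmless. The CLI’s --size-only flag is one way to change the comparison, at the risk of missing edits that keep a file’s size unchanged.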

Conclusion

This setup achieves a lot of my goals:

- Cheap (should be less than $1, depending on traffic)

- Simple and fast set-up

- Security best-practices followed in AWS (Principle of least privilege, temporary credentials, …)

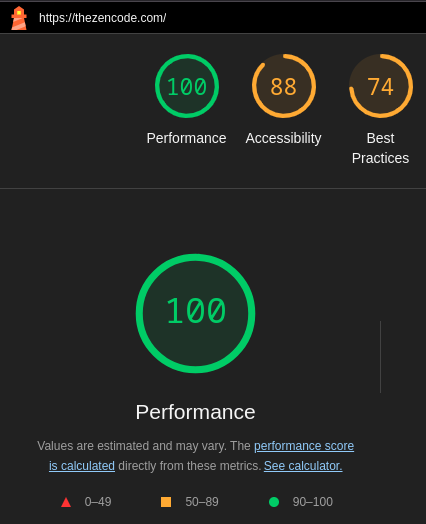

- Performant (Lighthouse score around 100)

Desktop lighthouse score. Can work on accessability and best practices

There are also some aspects that aren’t ideal:

- Publishing new content requires committing code to the repository. This likely wouldn’t work for clients who aren’t developers themselves, but since I’m the one creating the content, it’s a drawback I’m okay with.

- Static sites can lack interactivity. There are options for commenting systems, but I’d like to host something of my own. If I add comments, I’ll write a follow-up post about integrating isso or some other commenting system.

- I had some issues with pages not being found. CloudFront/S3 didn’t automatically look for an index.html document when the URL didn’t name a file. The ‘fix’ (really a workaround) for me was to use Hugo’s uglyURLs option.
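The root URL works because CloudFront’s DefaultRootObject applies to "/" only. The S3 REST endpoint that CloudFront reads from, when the bucket is private, does not resolve index documents in subdirectories. A common alternative to uglyURLs is a small CloudFront Function on viewer requests that appends index.html to directory-style URIs. CloudFront Functions are written in JavaScript; the sketch below only mirrors the rewriting logic in Python. The function name is hypothetical, and treating a last path segment without a dot as a directory is an assumption that fits typical Hugo output:

```python
def rewrite_uri(uri: str) -> str:
    """Append index.html to directory-style URIs, as a viewer-request function might."""
    if uri.endswith("/"):
        # "/posts/hello/" -> "/posts/hello/index.html"
        return uri + "index.html"
    last_segment = uri.rsplit("/", 1)[-1]
    if "." not in last_segment:
        # No file extension, so assume a directory: "/posts/hello" -> "/posts/hello/index.html"
        return uri + "/index.html"
    # Looks like a file (e.g. "/css/site.css"): leave it unchanged.
    return uri


if __name__ == "__main__":
    for uri in ["/", "/posts/hello/", "/posts/hello", "/css/site.css"]:
        print(uri, "->", rewrite_uri(uri))
```

With uglyURLs, Hugo instead writes pages as, for example, posts/hello.html rather than posts/hello/index.html. Links then point directly at a file, and no rewriting is needed.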

Overall, I’m really happy with the site and am looking forward to writing posts. In case someone actually ends up reading this and looks forward to more posts, don’t get your hopes up too much: I’ll only write a post when there is something post-worthy, which could take a while. The KubeCon 2024 post was more of a test post to get the site up and running; upcoming posts will be more like this one.

If you made it this far, I’m very grateful for your attention. Hopefully you learned something! Till next time!